The Ultimate Web Analytics Data Reconciliation Checklist

Ideally you should only have one web analytics tool on your website.

If you have nothing and you are starting out then sure have a few different ones, stress test them, pick the one you love (just like in real life!), but then practice monogamy.

At the heart of that recommendation is a painful lesson I have learned: It is a long hard slog to convert an organization to be truly data driven.

And that's with one tool.

Having two tools just complicates life in many subtle and suboptimal ways. One "switch" that commonly occurs is the shift from fighting the good fight of getting the organization to use data to bickering about data not matching, having to do multiple sets of coding for campaigns (the page tagging work itself is trivial) and so on and so forth.

In a nutshell the efforts become all about data and not the quest for insights.

So if you can help it, have one tool. Bigamy at least in this case is undesirable. [If that does not convince you remember the magnificent 10/90 rule from May 2006 when I was but a naïve web analytics Manager.]

But.

Pontification aside the reality is that many people run more than one tool on their website (though hopefully they are all on their way to picking the best of the lot). That means the bane of every Analyst's existence: Data reconciliation!

It is a thankless task, takes way more time than needed and the "game" is so rigged that 1] it is nearly impossible to get to a conclusion and 2] it is rarely rewarding – i.e. worth it.

But reconcile we must. So in this post I want to share my personal checklist of things I look for when going through a data reconciliation exercise. Usually this helps get things to within 95% and then I give up. It is so totally not worth it to get the rest!

* This post is a bit technical, but a Marketer should be able to understand it. And my goal is you smile three times while you read it, either from the inside jokes or from the sheer pain on display! *

So if you are starting a data reconciliation project for your web analytics tools, make sure you check for these things:

#1: Comparing Web Logs vs. JavaScript driven tools. Don't.

#2: First & Third Party Cookies. The gift that keeps giving!

#3: Imprecise website tagging.

#4: Torture Your Vendor: Check Definitions of Key Metrics.

#5: Sessionization. One Tough Nut.

#6: URL Parameter Configuration. The Permanent Tripwire.

#7: Campaign Parameter Configuration. The Problem of the Big.

#8: Data Sampling. The Hidden "Angel".

#9: Order of the Tags. Love it, Hate it, Happens.

Intrigued? Got your cup of coffee or beer? Ready to become sexycool?

Let's deep dive. . . .

#1: Comparing Web Logs vs. JavaScript driven tools. Don't.

I know, I know, you all get it. Yes you understand that this is not just comparing apples and oranges but more like comparing apples and monkeys.

For the five of us that are not in that camp: these two methods of collecting data are very different, the processing and storage is different, the things that impact each are very different.

So if you are using these two methods then know that your numbers might often not even come close (by that I mean within 85 – 90%).

The primary things that web logs have to deal with are effective and extensive filtering of robots (if you are not doing this you are screwed regardless), the definition of unique visitor (are you using cookies? just IP? IP + User Agent ids?) and, this is increasingly minor, but data caching (at a browser or server level) can also mean missing data from logs.
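To make the robot filtering and visitor definition point concrete, here is a minimal sketch. The `Hit` record, the `BOT_MARKERS` list and the sample hits are all made up for illustration (real robot lists are far longer, and your log format will differ). It simply shows that the same filtered log produces different Unique Visitor counts depending on which identity you pick.

```python
from collections import namedtuple

# Hypothetical, simplified log record; real log lines carry many more fields.
Hit = namedtuple("Hit", ["ip", "user_agent", "cookie_id"])

# Hypothetical substrings that mark a robot; a real list is much longer.
BOT_MARKERS = ("bot", "crawler", "spider")


def is_robot(hit):
    """Flag a hit as a robot if its user agent contains a known marker."""
    ua = hit.user_agent.lower()
    return any(marker in ua for marker in BOT_MARKERS)


def unique_visitors(hits, key):
    """Count distinct visitors among non-robot hits for a given identity key."""
    return len({key(h) for h in hits if not is_robot(h)})


hits = [
    Hit("10.0.0.1", "Mozilla/5.0 Firefox", "c1"),
    Hit("10.0.0.1", "Mozilla/5.0 Safari", "c2"),   # same IP, different browser
    Hit("10.0.0.2", "Mozilla/5.0 Firefox", "c1"),  # same cookie, different IP
    Hit("10.0.0.3", "Googlebot/2.1", None),        # robot, filtered out
]

by_ip = unique_visitors(hits, key=lambda h: h.ip)
by_ip_ua = unique_visitors(hits, key=lambda h: (h.ip, h.user_agent))
by_cookie = unique_visitors(hits, key=lambda h: h.cookie_id)

print(by_ip, by_ip_ua, by_cookie)  # prints: 2 3 2
```

Three definitions, three answers, and none of them is "wrong". That is before a javascript tool even enters the picture.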

There is also the Very Important matter of Rich Media content: Flash, Video, Flex, Apps whatever. Without extensive custom coding your weblogs are clueless about all your rich media experience (time spent, interactions etc). Most Tag based solutions now come with easy to implement solutions that will track rich media. So if you have a rich media site know that that will cause lots of differences between numbers you get from logs and numbers you get from tags.

The primary thing that afflicts javascript tags, in this context (more later), is browsers that have javascript turned off (2-3% typical); data for those visitors will be missing from tag based data.

Be careful when you try to compare these two sources.

[Bonus Reading: The Great Web Data Capture Debate: Web Logs or JavaScript Tags?]

#2: First & Third Party Cookies. The gift that keeps giving!

Notice the sarcasm there? : )

It turns out that whether you use first party cookies or third party cookies can have a huge impact on your metrics. Metrics like Unique Visitors, Returning Visits etc.

So check that.

Typically if you are 3rd party then your numbers will be higher (and of course wrong), compared to numbers from your 1st party cookie based tool.

Cookie flushing (clearing cookies upon closing browser or by your friendly "anti spyware" tool) affects both the same way.

Cookie rejection is more complex. Many new browsers don't even accept 3rd party cookies (bad). Some users set their browsers to not accept any cookies, which hurts both types the same.
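Here is a toy simulation of why rejected cookies push visitor counts up. It assumes, purely for illustration, that a tool hands out a fresh visitor id whenever it finds no cookie; the names (`count_unique_visitors`, `blocks_third_party`) and the visit data are hypothetical and not taken from any vendor.

```python
from itertools import count


def count_unique_visitors(visits, cookie_survives):
    """Count the distinct cookie ids a tool would see.

    visits: list of person names, one entry per visit, in time order.
    cookie_survives: function(person) -> True if the tool's cookie is
    still in that person's browser on their next visit.
    """
    fresh_ids = count(1)
    stored = {}       # person -> cookie id currently in their browser
    seen_ids = set()  # every id the tool has ever recorded
    for person in visits:
        if person not in stored:
            stored[person] = next(fresh_ids)  # no cookie found, issue a new id
        seen_ids.add(stored[person])
        if not cookie_survives(person):
            del stored[person]  # cookie rejected or flushed
    return len(seen_ids)


visits = ["ann", "bob", "ann", "cat", "bob", "ann"]
blocks_third_party = {"ann"}  # assumption: Ann's browser rejects 3rd party cookies

first_party = count_unique_visitors(visits, lambda p: True)
third_party = count_unique_visitors(visits, lambda p: p not in blocks_third_party)

print(first_party, third_party)  # prints: 3 5
```

Three real people, yet the third party tool in this sketch reports five Unique Visitors because Ann looks brand new on every visit.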

We should have done away with this a long time ago but many vendors (including paid!) continue to use third party as default. I was just talking to a customer of OmniCore yesterday and they just finished implementation (eight months!!) and were using third party cookies.

I wanted to pull my hair out.

There are rare exceptions where you should use 3rd party cookies. But unless you know what you are doing, demand first party cookies. If free web analytics tools now offer first party cookies as standard there is no reason for you not to use them.

End of soap box.

Check the type of cookies each tool uses; it will explain lots of your data differences.

[Bonus Reading: A Primer On Web Analytics Visitor Tracking Cookies.]

#3: Imprecise website tagging.

Other than cookies I think this is your next BFF in data recon'ing.

Most of us use javascript tag based solutions. In case of web log files the server at least collects the minimum data without much work because that is just built into web servers.

In case of javascript solutions, sadly, we are involved. We the people!

The problem manifests itself in two ways.

Incorrectly implemented tags:

The standard javascript tags are pretty easy to implement. Copy / paste and happy birthday.

But then you can add / adjust / caress them to do more things (now you know why it takes 8 months to implement). You can pass sprops and evars and user_defined_values and variables and bacteria.

You should make sure your WebTrends / Google Analytics / IndexTools / Unica are implemented correctly i.e. passing data back to the vendor as you expect. Else of course woe be on you!

To check that you have implemented the tags right, and the sprops are not passing evars and that user defined values are not sleeping with the vars, I like using tools like IEWatch Professional. [I am not affiliated with them in any way.]

[Update: From my friend Jennifer, if you are really really into this stuff, 3 more: Firebug, Web Developer Toolkit & Web Bug.]

Your tech person can use it and validate and assure you that the various tools implemented are passing correct data.

Incompletely implemented tags:

This one's simple. Your IT department (or brother) implemented Omniture tags on some pages and Google Analytics on most pages. Well you have a problem.

Actually this is usually the culprit in a majority of the cases. Make sure you implement both tools on all the same pages (if not all the pages on the site).

Mercifully your tech person (or dare I say you!) can use some affordable tools to check for this.

You would have noticed in my book Web Analytics: An Hour A Day I recommended REL Software's Web Link Validator. I continue to like it. Of course WASP, from our good friend Stephane, did not exist then and I am quite fond of it as well.

If you want to have a faster reconciliation between your tools, make sure you have implemented all your analytics tools correctly and completely.
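If you would rather script a quick completeness check yourself, here is a rough sketch. It assumes you already have the HTML of each page in hand (from a crawler, say), and the marker strings `tool-a-tracker.js` and `tool-b-tracker.js` are placeholders: swap in whatever snippet uniquely identifies each of your tools' tags.

```python
# Hypothetical marker strings; replace with text unique to each tool's tag.
TAG_MARKERS = {
    "tool_a": "tool-a-tracker.js",
    "tool_b": "tool-b-tracker.js",
}


def missing_tags(pages, markers=TAG_MARKERS):
    """Return {url: [tools whose tag is absent]} for pages missing any tag."""
    report = {}
    for url, html in pages.items():
        absent = sorted(tool for tool, marker in markers.items() if marker not in html)
        if absent:
            report[url] = absent
    return report


# Made-up pages standing in for crawled HTML.
pages = {
    "/": '<script src="tool-a-tracker.js"></script><script src="tool-b-tracker.js"></script>',
    "/cart": '<script src="tool-b-tracker.js"></script>',
    "/thanks": "<p>no tags at all</p>",
}

report = missing_tags(pages)
print(report)  # prints: {'/cart': ['tool_a'], '/thanks': ['tool_a', 'tool_b']}
```

A string match only tells you the tag is on the page, not that it fires correctly, so this complements the debugging tools above rather than replacing them.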

[Bonus Reading: Web Analytics JavaScript Tags Implementation Best Practices..]

#4: Torture Your Vendor: Check Definitions of Key Metrics.

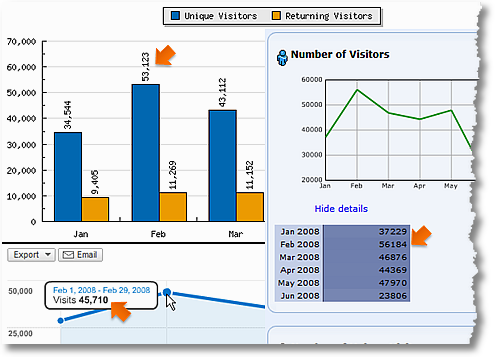

Perhaps you noticed in the very first image that StatCounter, ClickTracks and Google Analytics were showing three completely different numbers for Feb. But notice that they also all give that metric a different name.

Visits. Visitors. Unique Visitors. All for the same metric, "sessions".

How exasperating!

As an industry we have grown organically and each vendor has hence created their own metrics or at other times taken standard metrics, and just to mess with us, decided to call them something else.

Here, honest to God, are three definitions of conversion rate I have gotten from web analytics vendors:

Conversion = Orders / Unique Visitors

Conversion = Orders / Visits

Conversion = Items Ordered / Clicks

What! Items Ordered / Clicks? Oh, the Humanity!
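To see how far apart those three definitions land, plug one set of numbers into all of them. The figures below are invented for illustration only.

```python
# Made-up monthly numbers for a single site.
orders = 50
items_ordered = 120
unique_visitors = 2000
visits = 4000
clicks = 10000

conversion_rates = {
    "Orders / Unique Visitors": orders / unique_visitors,
    "Orders / Visits": orders / visits,
    "Items Ordered / Clicks": items_ordered / clicks,
}

for definition, rate in conversion_rates.items():
    print(f"{definition}: {rate:.2%}")
# prints:
# Orders / Unique Visitors: 2.50%
# Orders / Visits: 1.25%
# Items Ordered / Clicks: 1.20%
```

Same site, same month, and a "conversion rate" that is anywhere from 1.20% to 2.50% depending on whom you ask.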

So before you tar and feather a particular web analytics tool (or worse listen to the vendor's talking points) and decide which is better, torture them to understand exactly what the precise definition is of the metric you are comparing.

It can be hard.

Early in my career (just a few years ago, I am not that old!) I called the top vendor and tried to get the definition of Unique Visitor. What I saw on the screen was Daily Unique Visitor. I wanted to know if it was the same as the definition in my tool, ClickTracks (which was a count of distinct persistent cookie ids for whatever time period was chosen).

The VP's answer: "What do you want to measure? We can do it for you."

Me: "I am looking at Unique Visitors in CT for this month. I am looking at Unique Visitors for that month in OmniCoreTrends, I see a number, it does not tie."

VP: "We can measure Monthly Unique Visitors for you and add it to your account."

Me: "What if I want to compare Unique Visitors for a week?"

VP: "We can add Weekly Unique Visitors to your account."

Me: (Getting impressed at the savviness at stonewalling) "What is your definition of Unique Visitors?"

VP: "What is it that you need to measure? We can add it to your account."

You have to give her / him this: they are very good at their job. But as a user my experience was bad.

Even if a metric has the same name between the tools check with the vendor. It is possible you are comparing apples and pineapples.

Torture your vendor.

[Bonus Reading: Web Metrics Demystified, Web Analytics Standards: 26 New Metrics Definitions.]

#5: Sessionization. One Tough Nut.

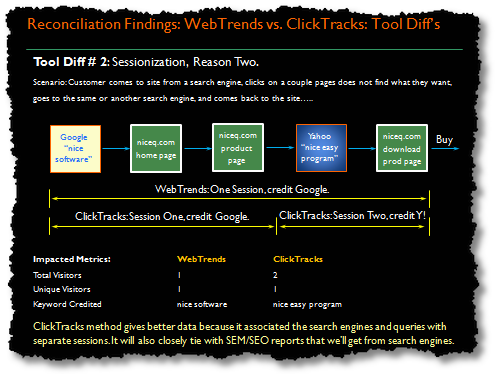

You can think of this as a unique case of a metric's definition but it is just so important that I wanted to pull it out separately.

"Sessions" are important because they essentially measure the metric we know as Visit (or Visitors).

But taking your clicks and converting that into a session on the website can be very different with each vendor.

Some vendors will time out a session after 29 minutes of inactivity. Some will do that after 15 mins. Which means right there, for the very same clicks, one tool could report 1 visit and the other 2.
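A minimal sketch of inactivity based sessionization shows the effect. The click times are invented, and real tools layer more rules on top (referrer changes, midnight cutoffs and so on); this only models the timeout.

```python
def count_visits(click_minutes, timeout_minutes):
    """Count sessions: a new visit starts whenever the gap since the
    previous click is longer than the inactivity timeout."""
    visits = 0
    previous = None
    for minute in sorted(click_minutes):
        if previous is None or minute - previous > timeout_minutes:
            visits += 1
        previous = minute
    return visits


# Minutes since the first click; note the 20 minute pause in the middle.
clicks = [0, 5, 25, 30]

print(count_visits(clicks, timeout_minutes=29))  # prints: 1
print(count_visits(clicks, timeout_minutes=15))  # prints: 2
```

One person, four clicks, and the two tools already disagree on Visits by 100%.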

Here's another place where how a vendor does sessionization could be a problem:

The fact that someone went to a search engine and came back to your site "breaks" the first session and starts another in one tool, but not the other.

One last thing, check the "max session timeout" settings between the tools. Some might have a hard limit of 30 mins, others have none (in using a top paid tool I found Visits that lasted 1140 mins or 2160 mins – visitors went to the site, left the page open, came back to work, clicked and kept browsing, or came back after the weekend).

Imagine what it does to Average Time on Site!

Probe this important process because it affects the most foundational of all metrics (Visits or Visitors – Yes they are the same one, aarrrrhh!).

[Bonus Reading: Convert Data Skeptics: Document, Educate & Pick Your Poison.]

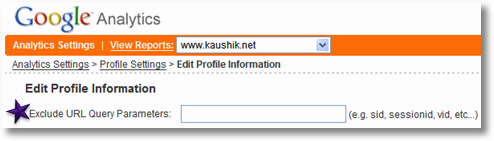

#6: URL Parameter Configuration. The Permanent Tripwire.

Life was so sweet when all the sites were static. URL's were simple:

http://www.bestbuy.com/video/hot_hot_hottie_hot.html

It was easy for any web analytics tool to understand visits to that page and hence count page views.

The problem is that the web became dynamic and urls for web pages now look like this:

http://www.bestbuy.com/site/olspage.jsp?id=abcat0800000&type=category

(phone category page)

or

http://www.bestbuy.com/site//olspage.jsp?id=1205537515180&skuId=8793861&type=product

(particular phone page)

or

http://www.bestbuy.com/site/olspage.jsp?skuId=8793861&productCategoryId=abcat0802001&type=product&tab=7&id=1205537515180#productdetail

(same phone page, clicked on a tab on that page)

The problem is that while web analytics tools have gotten better and can probably understand that first page (phone category page), it is not quite as straightforward for the next two.

They contain "tracking parameters" or "system parameters" (crap from the server) or other junk. Different pieces of information, some worth ignoring and others you ignore at your own peril.

Your web analytics tool has a hard time taking all these pieces and painting the right portrait (or counting the page views correctly).

So what you have to do is sit down with your beloved IT folks and first you spend time documenting what all the junk in the url is. Things like skuId, productCategoryId, type, tab, id.

Some of these make a web page unique, like say skuId, productCategoryId and tab. I.e. their presence and the values they contain mean it's a unique page. So skuId=8793861 means one phone and skuId=8824739 is another.

But there will be some that don't mean anything. For example it does not matter if type=product is in the URL or not.

Here's your To Do: Go teach your web analytics tool which parameters to use and which to ignore.

And here's what that part looks like for Google Analytics. . . .

In ClickTracks it is called "masking and unmasking" parameters. In Omniture it's called josephine. I kid, I kid. :)

If you don't do this then each tool will try to make their own guesses. Which means they'll do it imprecisely. Which means they won't tie. Much worse they'll be living in the land of "truthiness"!

And make sure you do the same configuration in both the tools! That will get you going in terms of ensuring that the all important Page Views metric will be correct (or at least less inaccurate).
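If it helps to picture what "teaching the tool" amounts to, here is a rough sketch of the underlying idea: reduce every URL to a canonical page name built only from the parameters that matter. The `SIGNIFICANT_PARAMS` set follows the examples above; leaving `id` out of it is my assumption for this sketch, so confirm with your IT folks before ignoring anything on a real site.

```python
from urllib.parse import urlsplit, parse_qsl, urlencode

# Parameters that make a page unique, per the examples above.
# Assumption: "id" and "type" are treated as ignorable in this sketch.
SIGNIFICANT_PARAMS = {"skuId", "productCategoryId", "tab"}


def canonical_page(url, keep=SIGNIFICANT_PARAMS):
    """Reduce a URL to its path plus only the significant parameters, sorted."""
    parts = urlsplit(url)  # the #fragment is split off and dropped
    kept = sorted((k, v) for k, v in parse_qsl(parts.query) if k in keep)
    query = urlencode(kept)
    return parts.path + ("?" + query if query else "")


a = canonical_page(
    "http://www.bestbuy.com/site/olspage.jsp?id=1205537515180&skuId=8793861&type=product"
)
b = canonical_page(
    "http://www.bestbuy.com/site/olspage.jsp?type=product&skuId=8793861"
)
c = canonical_page(
    "http://www.bestbuy.com/site/olspage.jsp?skuId=8793861&productCategoryId=abcat0802001"
    "&type=product&tab=7&id=1205537515180#productdetail"
)

print(a)  # prints: /site/olspage.jsp?skuId=8793861
print(b)  # prints: /site/olspage.jsp?skuId=8793861
print(c)  # prints: /site/olspage.jsp?productCategoryId=abcat0802001&skuId=8793861&tab=7
```

The first two URLs collapse into one page, while the tab click stays separate. Apply a different keep list in each tool and your Page Views will never tie.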

I won't touch on it here but if you are using Event Logging for Web 2.0 / rich media experiences it adds more pain.

Or if you are generating fake page views to do various things like tracking form submissions or to track outbound links (boo Google Analytics!) or other such stuff then do that the same way between tools.

Just be aware of that. By doing the right config for your URL parameters in your web analytics tool you are ensuring accurate count of your page views, and across all the tools you are comparing. Well worth investing some effort for this cause.

[Bonus Reading: Data Mining And Predictive Analytics On Web Data Works? Nyet!]

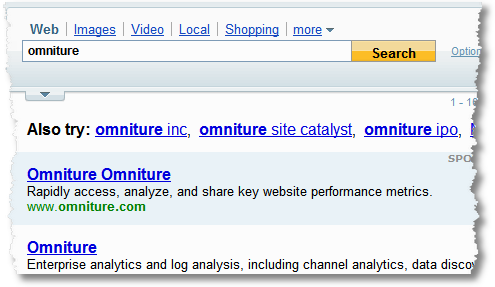

#7: Campaign Parameter Configuration. The Problem of the Big.

Ok maybe all of us run campaigns. But the "big" do this a lot more.

If you run lots of campaigns (Email, Affiliates, Paid Search, Display, Mobile, etc) then it is very important that you tag your campaigns correctly and then go configure your web analytics tools correctly to ensure your campaigns are reported correctly, your referrers are reported correctly, your revenue and conversions are attributed correctly.

Here is a simple example.

If you search for Omniture in Yahoo:

[To the person running Omniture's paid search campaigns: I realize Omniture is important but you might reconsider mentioning your company's name twice! I have seen this ad on Yahoo for months (yes I search for Omniture that much!) . :)]

You end up here:

If you search for Omniture on Google, you end up here:

http://www.omniture.com/static/278?s_kwcid=omniture|2109240905&s_scid=omniture|2109240905

You'll note that Omniture's done a great job of tagging their campaigns. Absolutely lovely. Now. . . .

Let's say Omniture is using WebTrends and IndexTools on their website to do web analytics. Then they would have to go into each of those tools and "teach" them all campaign parameters they are using, the hierarchies and what not.

That will ensure that when they click on Paid Search tab / button / link in the tool that these campaigns will be reported correctly.

You'll have to repeat this for your affiliate and email and display and all other things you are doing.

If you have two tools you'll have to do it twice. And each tool might not accept this data in the same way. For WebTrends you might have to place it in the URL stem, in IndexTools you might have to put it in the cookies, in Google Analytics it might have to be a customized javascript.
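Conceptually, what each tool needs is a lookup from campaign parameter to reporting channel. Here is a minimal sketch of that idea. The `CAMPAIGN_PARAMS` mapping is hypothetical (only `s_kwcid` appears in the example URL above, and treating it as Paid Search is my reading of that example; `aff_id` and `email_id` are invented), and every real tool has its own way of taking this configuration.

```python
from urllib.parse import urlsplit, parse_qs

# Hypothetical mapping from campaign parameter name to reporting channel.
CAMPAIGN_PARAMS = {
    "s_kwcid": "Paid Search",
    "aff_id": "Affiliate",   # invented parameter name
    "email_id": "Email",     # invented parameter name
}


def classify_landing(url, mapping=CAMPAIGN_PARAMS):
    """Return (channel, raw parameter value), or ("Unattributed", None)."""
    params = parse_qs(urlsplit(url).query)
    for name, channel in mapping.items():
        if name in params:
            return channel, params[name][0]
    return "Unattributed", None


tagged = classify_landing(
    "http://www.omniture.com/static/278?s_kwcid=omniture|2109240905&s_scid=omniture|2109240905"
)
untagged = classify_landing("http://www.omniture.com/static/278")

print(tagged)    # prints: ('Paid Search', 'omniture|2109240905')
print(untagged)  # prints: ('Unattributed', None)
```

If one tool knows this mapping and the other does not, the second will report the same visit as plain referral or direct traffic, and your campaign numbers will not reconcile.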

Suffice it to say not a walk in the park. (Now you'll understand why clean campaign tracking is the hardest thing to do, see link immediately below.)

[Bonus Video: Evolve Intelligently: Achieve Web Analytics Nirvana, Successfully.]

#8: Data Sampling. The Hidden "Angel".

This is a problem (see "angel" :)) that many people are not aware of, and underestimate in terms of its impact.

But I want to emphasize that it will, usually, only impact large to larger companies.

There will be more about sampling at the link at the end of this section. But in a nutshell there are two kinds of sampling in web analytics.

Data Sampling at Source:

Web Analytics is getting to be very expensive if you are a site of a decent size.

If you are of a decent size (or then some), a typical strategy with a paid web analytics vendor is not to collect all your data, because your web analytics bill is based on the page views you send over.

So you don't tag all your pages or you tag all your pages but they only store a sample of data.

This can cause a data reconciliation issue.

Data Sampling at "Run Time":

In this case all the data is collected (by your free or paid tool) but when you run your reports / queries it will be sampled to make it run fast.

Sometimes you have the control over the sampling (like in ClickTracks) and at other times not quite (like in Omniture Discover or WebTrends Marketing Lab etc) and at other times still no control at all (like in Google Analytics).

Sampling at "run time" is always better because you have all the data (should you be that paranoid).

But as you can imagine depending on the tool you are using data sampling can greatly impact the Key Performance Indicators you are using. This means all / none / some of your data will not reconcile.
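A tiny, deterministic sketch shows how a sampled number drifts from the full count. It uses simple every-nth-hit sampling on synthetic data, which is an assumption chosen for clarity and not a description of how any particular vendor samples.

```python
def page_views(hits, page):
    """Exact page view count for one page."""
    return sum(1 for h in hits if h == page)


def sampled_page_views(hits, page, every_nth):
    """Estimate page views from every nth hit, scaled back up."""
    sample = hits[::every_nth]
    return page_views(sample, page) * every_nth


# 1,000 synthetic hits in which every 7th hit is the pricing page.
hits = ["/pricing" if i % 7 == 0 else "/other" for i in range(1000)]

exact = page_views(hits, "/pricing")
estimate = sampled_page_views(hits, "/pricing", every_nth=10)

print(exact, estimate)  # prints: 143 150
```

A roughly 5% gap from sampling alone, on clean data, with nothing else going wrong. Compare a sampled tool with an unsampled one and this is part of the difference you will be chasing.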

So investigate this, most vendors are not as transparent about this as they should be, push 'em.

[Bonus Reading: Web Analytics Data Sampling 411.]

#9: Order of the Tags. Love it, Hate it, Happens.

This, being the last one, is not the hugest of deals. But on heavily trafficked websites, or ones that are just heavy (can sites be obese?), this can also affect the differences in the data.

As your web page starts to load the tags are the last thing to load (a very good thing, always have your tags just above the [/body] tags, please). If you have more than one tag then they get executed in the order they are implemented.

Sometimes on fat pages some of the tags might just not get executed.

It happens because the user has already clicked. It happens because you have custom hacked the bejesus out of the tag and it is now an obese tag, and does not let the other, Heidi Klum type sexy and lean tags load in the time available.

If you want that last amount of extra checking, switch the order of the tags and see if it helps. It might help explain the last percent of difference you are dying to get. :)

That's it! We are done!! Well I am. :)

I suspect you'll understand a lot better why I recommend having just one tool (after rigorous evaluation of many tools) and then actually spending time creating a data driven organization.

Ok it's your turn now.

What did I miss? What are other things you have discovered that can cause data discrepancies between tools? Which one of the above nine is your absolute favorite? Cookies? URL's? Page Parameters?

Please share your own delightful experiences, insights and help me and our community.

Thank you.

PS:

In case you are in the process of considering a web analytics tool, here is my, truly comprehensive (more than you ever wanted to know) guide through the process:

- How to Choose a Web Analytics Tool: A Radical Alternative

- Web Analytics Tools Comparison: A Recommendation

- Video: Web Analytics Vendor Tools Comparison (And One Challenge)

- Find Your Web Analytics Soul Mate (How To Run An Effective Tool Pilot)

- Web Analytics Tool Selection: 10 Questions to ask Vendors

- Web Analytics Tool Selection: 3 Questions to ask Yourself

- Negotiating A Web Analytics Vendor Contract? Check SLA’s

and a bonus

November 6th, 2008 at 03:09

Again a great and consistent article! Thank you Avinash.

But..

How about browser/mobile support? Will the data gathered from mobile devices involve the same storage mechanisms as data from regular sources (e.g. PCs)?

November 6th, 2008 at 04:32

Hi, terrific post.

You talk about fat pages, affecting execution of tags. How would you define a fat page? Any rule of thumb?

Also, I would presume that the visitor connection speed plays a part on this?

And the hosting of the website ?

Many thanks in advance for your specific reply.

Jon

November 6th, 2008 at 04:51

Sampling v table length limiting – one of my favourite topics of discussion. It's just a shame nobody else is interested in it.. *sigh.

Loaded question – but how big are the data tables before Google starts sampling? And do they sample the long tail, or the whole table?

You didn't really touch on how backend databases match up with analytics tools (although that kind of comes under log file v javascript).

Nice article, as always Avinash.

November 6th, 2008 at 06:41

Very good article Avinash, very helpful for me as I'm researching different web analytics packages right now for use in my organization. Definitely helps to clear up some questions I've had for a long time.

November 6th, 2008 at 06:46

What did I miss?

From my experience, a sometimes bigger speed-bump down the road of web analytics implementation success is the disconnect between company owner or marketing person and their IT / Web Development department or 3rd party company. It amazes me how painstakingly difficult it is a lot of times to get one ROI tag copied / pasted onto a single page's source code, let alone a site-wide or ecosystem-wide coding implementation. Granted, some reasons why this is are contractual in nature, but a lot of times it's a big challenge to pass instructions and coding samples back and forth to multiple groups of people. It can go smoothly, and sometimes it does, but I would advise patience (and good communication / good relationships with your IT team)

What are other things you have discovered that can cause data discrepancies between tools?

You mentioned this already to some extent – how about configuration settings being different from tool to tool? For example if you are filtering out your own internal traffic in Google Analytics with a nice advanced RegEx Filter – are you doing the same in WebTrends? If you are excluding referral traffic from a bad spam site in SiteCatalyst, are you excluding it in your IndexTools account? Do you have the same Goals / Outcomes / Conversion Points set up everywhere? If you are using multiple tools, and if you are comparing a tool vs. a tool, then you need to do whatever you can to configure the tools as close as you possibly can to each other IMO.

Which one of the above nine is your absolute favorite?

#3 – Imprecise Website Tagging.

Thank you!

November 6th, 2008 at 08:00

Avinash – your post makes me feel like I'm barely touching the tip of the iceberg. The funny thing is that for most clients, big and small, that's OK….we can get plenty far with the simple stuff. I was surprised to learn that we should only use one data source. It seemed like as an engineer, we were always taught to get multiple data sources to check the validity of them, but with the vast amount of variances between how the tools work and define the data, you really can't compare them side by side. However, I have installed Clicky and GA on the same sites, and was surprised to see that the numbers were within 10-15% of each other. The problem would be if they were "way off", which one would I believe?

November 6th, 2008 at 09:02

Avinash,

Thanks for the informative and very timely (for me) blog post. My clients have been throwing some questions at me lately and I've been spending a great amount of time trying to answer questions for them in this realm.

I think that the mindset that Ryan refers to in his comment is very much alive in the business world. There is the tendency to check numbers against multiple and very different reporting sources. I'm not sure what is being taught in the academic environment on this topic today versus in the past. I think part of the solution to the problem is maybe better education of the differences in tools, in metrics, the impacts of filters, etc…

The message I get from clients is that we should strive for the 'right numbers.' Correct me if I'm wrong here…My belief is that each tool provides the 'right number' given the filters, methodology, metrics definition, etc…applied to its specific environment. I see web analytics tools as trending tools. It's not so much about having the 'right numbers' as it is about providing a consistent environment with consistent tracking configurations (tagging, sessionization, filters, definition of a day, etc…) to trend performance over time. Given all of these variables is tracking remaining the same this month versus last month…did we do better this month? Agreed…first party cookies help get us closer to the 'right numbers' though.

Since good news is rewarded in business, I think that it's probably a common problem (for people like us) to have clients want to look at multiple tools and then heavily desire to report on whichever source is reporting the highest numbers. Because higher numbers are good news and can lead to higher bonuses! I'm trying to reconcile for the business why the panel-based vendors (compete.com, comscore, nielsen, etc..) are reporting such vastly different (and higher…and therefore more desirable) numbers than our web analytics solution. You and I know we are talking about comparing moons to tricycles now. Some of your earlier posts have talked about data reconciliation and have been helpful in building my case and just to know what to chase. This posting is complementary to those earlier posts and goes further in depth.

Thanks!

November 6th, 2008 at 09:10

Avinash,

Loved the OmniCoreTrends reference!

Rob

November 6th, 2008 at 11:33

Thanks for the post Avinash. I feel more and more like a novice every time I read your articles. Fantastic!!

November 6th, 2008 at 11:54

#4: Torture Your Vendor: Check Definitions of Key Metrics.

Google Analytics is shocking at providing definitions of metrics. Take for example Absolute Unique Visitors. The "About this report" says – How many people came to your site? This report graphs people instead of visits.

And when you get to the common questions section you get this:

"The initial session by a user during any given date range is considered to be an additional visit and an additional visitor. Any future sessions from the same user during the selected time period are counted as additional visits, but not as additional visitors."

Unfortunately nowhere is a 'user' defined. Also when rolling up daily, weekly and monthly figures how is this calculated? Are the uniques, unique of the time period? So many questions but so few answers :-(

Glen

November 6th, 2008 at 13:06

Bertjan: I don't know that mobile would affect one tool in a particularly worse way than another.

So all javascript based tools should be able to capture and show you visits from javascript enabled phones (86 from the iPhone to my blog yesterday according to GA!). If phones don't enable javascript then those tools are out of luck.

Ditto with log file based tools. They tend to capture more data, and if you are comparing different log files based tools then they should all report them the same (or you make sure they do! :)).

Alec: Here is a detailed answer to your question about data sampling triggers, the hard working people in Google Support come to the rescue!

http://www.google.com/support/analytics/bin/answer.py?hl=en-il&answer=66084

Joe: Excellent point. I should add a #10: Cultural challenges to deal with while you do data reconciliation. :)

Ryan: For reasons mentioned in the post I think we should strive for one tool. Then use the energy we would put into comparing numbers to trying to drive change using numbers. If one day we achieve nirvana then we can go back and add more tools and play with numbers, since taking action will be on cruise control! :)

Jon: I think it depends.

I was at an Akamai event yesterday and regardless of the fatness of the page they will use their service to ensure that all around the world it is delivered in less than three seconds (by the way that is the magic number based on lots of research). In that case you can create a fat page and you are fine.

Else go for three seconds delivery to San Jose, New York, Jakarta, Beijing and Sao Paulo. Then you are set. :)

Rich: Here's my tweet from a few days back: Ensure your WA strategy is to reduce data inaccuracy as much as possible. Don't focus on getting 100% accuracy. That does not exist.

I believe that.

There are no "right numbers". I am a part of a generation that believed that and we always tried to reach that goal (I grew up in the world of data warehouses and business intelligence and ERP and CRM systems). But painfully I have learned that you can either focus on that, or you can use the data you got. WA data gives you 900% more information than you have through traditional channels. It is only 90% "right", but the missing 10% is outweighed by the fact that you can now make decisions that are so much better informed.

It will take a while for the current crop of business leaders to "get it" – and sadly many many web analytics practitioners / consultants / vendors to get it. We need to realize there is more money to be made not peddling our services that make things "accurate" but rather peddling our ability to find raw awesome insights (whatever the tool the company has).

Glen: Torture the Google Analytics team. Period.

Start by using the Contact Us link at the bottom of every single Google Analytics report and complain (in 27 languages!). :)

The definition of absolute unique visitors in the GA report (that you quote in your comment) is embarrassing. Should be fixed.

But the GA Support team maintains this page with, most, of the Glossary:

http://www.google.com/support/googleanalytics/bin/topic.py?topic=11285

and in there you'll find a link to Absolute Unique Visitors:

http://www.google.com/support/googleanalytics/bin/answer.py?answer=33087&topic=11285

"Unique Visitors represents the number of unduplicated (counted only once) visitors to your website over the course of a specified time period. A Unique Visitor is determined using cookies."

GA remains one of the few tools (paid or free) that still calculates "absolute" unique visitors for any time period you want. Most tools will take your daily UV or weekly UV and total that up for the month. Or take the monthly UV and total that for the time period you want.

Try this for your tool: compute unique visitors on your site from Oct 16th to Nov 5th.

Most tools will either 1) not be able to do it, or 2) sum daily UVs or weekly UVs to give you the number (both wrong).

GA will compute absolute unique for any time period, including that one.
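To see why summing daily numbers is wrong, here is a minimal sketch in Python. It is not how GA or any other tool actually computes the metric; the visit log, the visitor ids and both function names are made up purely for illustration. It only shows that a visitor who comes back on several days is counted once in an absolute count and several times in a summed count.

```python
from datetime import date

# Hypothetical visit log: (visitor cookie id, date of visit).
visits = [
    ("visitor_a", date(2008, 10, 16)),
    ("visitor_a", date(2008, 10, 17)),
    ("visitor_b", date(2008, 10, 17)),
    ("visitor_a", date(2008, 11, 5)),
    ("visitor_c", date(2008, 11, 5)),
]


def absolute_uniques(visits, start, end):
    """Count each visitor id once across the whole period."""
    return len({vid for vid, day in visits if start <= day <= end})


def summed_daily_uniques(visits, start, end):
    """Count uniques per day, then add the daily numbers together."""
    per_day = {}
    for vid, day in visits:
        if start <= day <= end:
            per_day.setdefault(day, set()).add(vid)
    return sum(len(ids) for ids in per_day.values())


start, end = date(2008, 10, 16), date(2008, 11, 5)
print(absolute_uniques(visits, start, end))      # three distinct people
print(summed_daily_uniques(visits, start, end))  # visitor_a counted three times
```

In this toy data three people visited, but the summed daily number comes out at five because the returning visitor is counted again on each day.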

Ok now off to poke Alden to get him to fix the embarrassing definition in the Google Analytics product. :)

-Avinash.

November 6th, 2008 at 13:26

It is absolutely impressive Avinash how you can go from talking about a big picture strategic topic like innovation

http://www.kaushik.net/avinash/2008/10/redefining-innovation-incremental-side-effect-transformational.html

to talking about a micro picture topic like this post. From reinventing companies to url parameters.

So few people have this kind of depth and breadth in their expertise.

I tip my hat to you.

November 6th, 2008 at 15:32

Great post Avinash – this is particularly relevant for the thousands of clients that have switched from HitBox to SiteCatalyst (as I have been experiencing). One big frustration that I have encountered in transitioning between these tools is a difference in how visitor attention metrics are reported. One tool is 'visit' based, and the other is 'visitor' based – this means it's very hard to do year over year comparisons (oh, and the two report builders don't match up yet – omni's version lacks quite a few basic features from the HitBox version).

Anyone else experiencing these issues? It's a tough one to solve, with no clear answers coming from Omniture.

Rich Page (a web optimization fanatic)

http://www.rich-page.com

November 6th, 2008 at 15:33

Sorry, I meant 'visitor retention' not 'visitor attention' – i.e. repeat visit metrics.

November 6th, 2008 at 17:08

[...] The Ultimate Web Analytics Data Reconciliation Checklist [...]

November 6th, 2008 at 20:18

Joe brought up a great point about Conversion Points being different. If tool A is measuring clicks or clicking sessions to a submit button and tool B is measuring page views or viewing sessions on the "thank you for submitting" page then the results for that same conversion point could vary wildly.

My favorite is metric definition.

The one I hate the most is inconsistent tagging…

November 6th, 2008 at 23:20

Hi Avinash,

I would like to know more about #5, Sessionization: One Tough Nut.

Is the way visits are counted in GA the same as what you observed in CT?

I mean, if a visitor goes back to the search engine within the same session and then comes back to the same site, will that also be a single visit in GA?

Thanks a lot.

Bhagawat.

November 7th, 2008 at 03:24

[...] If you’re running more than one analytics tool, you need to read Avinash Kaushik’s tips for reconciling the data discrepancies because let’s face it, you’ve got ‘em. [...]

November 7th, 2008 at 08:32

Hi Avinash,

A request: could you make your blog colorless and less graphic?

I read it at work most of the time, and it sure attracts attention... the kind I do not want.

I am just unable to focus when I am home; I've got too many distractions ;-)

Thanks

November 7th, 2008 at 11:36

Thanks for a great and relevant post; we are facing a similar issue here, but not with two different analytics vendors… rather, it is in looking at reports for visitor referrals/sales coming from our Commission Junction affiliates and Google PPC campaigns, and how drastically they differ from the #s reported in our Coremetrics implementation. It's maddening, but ultimately we rely on the G and CJ reports for visitors & sales from those channels. Not sure how else we can rectify this, given cookie rejection issues, tracking code displacement which causes misattribution in CM, product feeds w/ no tracking assigned, etc…

p.s. PLEASE do NOT make your blog colorless and less graphic, it's what makes it so easy and fun to read!!!!!!

November 7th, 2008 at 12:57

Excellent post, it gives people a lot of things to think about (and everything they should be thinking about) when they go to implement analytics.

For applications to track data, I find Charles Proxy to be the cleanest and best, honestly. Live HTTP headers plugin is good for Firefox too, especially if you set it up to filter only on your analytics server.

Also, one thing that's easy to screw up for javascript tagging is cross-domain settings. Documentation is either sparse or confusing and even tech support usually gets it wrong. This could seriously affect First Party cookie data (check your entry pages for issues) and might force you to rely on Third Party until it gets resolved.

November 7th, 2008 at 14:40

@Bryan Cristina; Charles is a great tool and can be used to track non-webpage data as well (widgets, etc.) One of the other great things it does is allow you to re-write data on the fly. This helps with testing new tags and changing variables – You can change it in Charles and test it without touching your web server or application. This gets past having to deal with the Web team for every little testing change.

November 7th, 2008 at 14:51

Great post Avinash, you managed to make a very technical complicated topic simple. I am a director of a global analytics team and even I understood your post.

Let me also add that you should not change the look and feel of your posts. The images you use bring about an emotional connection with what you write. Besides, it is a refreshing change from all the other antiseptic blogs.

November 7th, 2008 at 21:19

I'm looking for some added guidance here. I'm generally of the mindset that more data is better. From a CPG standpoint, we love having information from Nielsen and IRI.

The different web analytics packages offer unique tools. Some are better than others at certain things.

Unless having 2 pieces of software somehow cancels out each other or destroys the data, I'm not sure why having: Google Analytics, WebTrends, and Quantcast installed at the same time is a bad idea.

What I do advise my team and my clients is that we need to agree on one method/tool/model for reporting, but that it's ok to have many sources of data.

November 7th, 2008 at 23:06

Adam: Please allow me to split the guidance into a couple of pieces.

First, the Web Analytics 2.0 execution framework does mandate that you have multiple tools because it calls on you to focus on understanding The What, The How Much, The Why and The What Else. Each needs at least one tool.

For Web Analytics 2.0 I call the successful tool strategy Multiplicity.

See this post: Multiplicity: Succeed Awesomely At Web Analytics 2.0!

Second, in this post I was specifically referring to whether it is optimal to have more than one ClickStream ("The What") tool.

I firmly believe you should strive to have just one. You can date a lot, marry just the one.

With regards to using WebTrends and Google Analytics at the same time, or Omniture and CoreMetrics at the same time. . . . answer this test :

1) Does your organization (or your clients) follow the 10/90 rule?

[Now it could be 30 / 70 or 20 / 80, but is the spend heavily weighted towards resources rather than tools?]

2) Does your organization (or your clients) have mostly Reporting Squirrels or Analysis Ninjas?

[Is the norm churning up dead trees - just reports - or presenting insights that get actioned?]

If the answer to either question is No then dump one tool. You are not adding value to the business, so why have two and even more distraction? Focus on actionability and on the organization.

If the answer to both questions is Yes then it is a preliminary indicator that your organization is running on data, and getting the extra 10% juice from having an additional tool might be worth it.

Remember after you pick one ClickStream tool, say CoreMetrics, Multiplicity demands you still have to have 4Q, Google Website Optimizer, Compete + Google Insights for Search + Google Ad Planner at the minimum.

If you are only using, in your case, WebTrends, Google Analytics and Quantcast, then it is better to dump WebTrends (seriously, did you expect me to say GA!) and go with 4Q or Website Optimizer. Your chances of improving your site will increase dramatically.

Hope this helps.

-Avinash.

November 8th, 2008 at 09:57

Re: Two mentions of 'omniture'. I believe OVKEY is the keyword they're bidding on, and OVRAW is what you typed in. If you can trigger the ad with something random like 'omniture aardvark' you should see two different values.
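If you want to check this on your own landing page URLs, the two values can be pulled apart with a few lines of Python. This is only a sketch: the example URL is made up, the function name is hypothetical, and the reading of OVKEY as the bid keyword and OVRAW as the typed query is the belief stated in the comment above, not something confirmed here.

```python
from urllib.parse import urlsplit, parse_qs


def overture_keywords(landing_url):
    """Return (OVKEY value, OVRAW value) from a landing page URL, or None for each if absent."""
    params = parse_qs(urlsplit(landing_url).query)
    # Query parameter names are case-sensitive, so normalise them before the lookup.
    lowered = {name.lower(): values[0] for name, values in params.items()}
    return lowered.get("ovkey"), lowered.get("ovraw")


# Made-up landing URL for a search on 'omniture aardvark'.
url = "http://www.example.com/landing?OVKEY=omniture&OVRAW=omniture%20aardvark"
bid_keyword, typed_query = overture_keywords(url)
print(bid_keyword)   # omniture
print(typed_query)   # omniture aardvark
```

If the two values differ for a random query like this one, that would support the reading above.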

November 10th, 2008 at 03:42

[...] The Ultimate Web Analytics Data Reconciliation Checklist by Avinash Kaushik on Occam's Razor [...]

November 12th, 2008 at 10:05

Beautiful post Avinash – I published a whitepaper on accuracy earlier this year which covers all of these matters. I hope it's of interest to your readers. It's vendor agnostic, though I must admit not as light hearted or humorous as yours…

http://www.advanced-web-metrics.com/accuracy-whitepaper

Best regards, Brian

November 19th, 2008 at 08:11

Hello Avinash,

I figured this post on data accuracy was the best fit for my comment.

I have been experiencing a rather awkward phenomenon since I implemented 4Q (and ClickHeat) on my website.

In Google Analytics I am seeing an unusually high volume of direct traffic, about 80 visits per day (my norm has always been 5 visits per day over the last 3 years), and 95% of it bounces away.

Because my website receives about 2.5K visits per month, the direct traffic above skews all my dashboard numbers badly.

I am removing the codes of the services mentioned above one by one to find the guilty one, but to no avail.

Also on the same day I implemented the codes above, I listed my company on poifriend.com, and I put my website url there – no link though. I thought maybe the direct traffic would come from visitors of POI friend who want to visit my page. I have removed the website url from the listing but the same continues to happen.

Could this be something completely different altogether? Robots? I notice Google has slightly changed Analytics since the beginning of November; maybe something going on there makes what's reported different from before?

Many thanks.

Daniel

November 19th, 2008 at 15:36

Daniel: It is difficult to imagine that 4Q would cause an increase in direct traffic. It works off your site and by the time the survey shows up the person's visit is already recorded on your web analytics tool (including referring information, like url's or search or email or whatever).

But an easy way to test your hypothesis is to remove both 4Q and ClickHeat (I am not very familiar with the latter unfortunately).

Listing your site on other websites where people will have to copy and paste the url will cause an increase in direct traffic (that's what it is supposed to be, typed in urls :)).

Search Robots don't execute javascript tags and hence won't show up in your javascript driven web analytics tools.

Nothing that changed in Google Analytics recently would cause your direct traffic to go up, it was just a features release (without touching the data).

Direct traffic can be many things, see this post for all the things that fall into direct traffic (or things you might have done that could have caused an increase):

Diagnosing Direct Traffic in a Web Analytics Tool.

Hope this helps a little bit.

-Avinash.

January 5th, 2009 at 10:31

[...]

Real-time reporting based on large volumes of traffic is the main justification for using this technique which, to be more precise, comes in different variants that can give it greater prominence (I recommend this post to go deeper into this topic).

The obstacle course suggests that the ideal situation involves using a single tool. A few weeks ago Avinash published a very interesting checklist on this subject on his blog.

[...]

January 8th, 2009 at 20:40

[...]

Analytics superhero (and one of my five favorite people I’ve never met in person) Avinash Kaushik wrote a marvelous blog recently about reconciling conflicting data between different platforms. Reading it, of course, would scare the bejesus out of the web analytics beginner, and rightfully so. However, I was at odds when Avinash seemed to recommend taking one platform and running with it.

[...]

February 2nd, 2009 at 08:07

[...]

The data I had been getting, and which I trusted blindly, have changed radically with a new tool. This is a very recurring topic, but it remains one of the main reasons for the lack of trust after a new implementation. To compare data from several tools coherently, you need to take into account many concepts "internal" to each solution: how cookies are used, differences in tagging, URL parameter configurations, domains considered internal, campaign parameters… Avinash has already given us a master class on this problem, and I am sure you have been able to enjoy it.

[...]

May 26th, 2009 at 07:30

Hello, Avinash!

In point #2: First & Third Party Cookies. The gift that keeps giving! You wrote "Check type of cookies, it will explain lots of your data differences".

Is there any universal way to check type of cookies?

May 29th, 2009 at 23:38

[...] tips on how to sensibly approach data reconciliation, check out this post by Avinash Kaushik, Google's Analytics Evangelist, or this whitepaper on accuracy in Google [...]

November 27th, 2009 at 06:18

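[...] If you really do run into this problem, take a look at our data reconciliation checklist and work through the items one by one. Fully resolving data reconciliation is impossible; set a range, for example allowing numbers to be within 10% of each other, and then move on to the next data problem. [...]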

December 3rd, 2009 at 01:40

[...]

5 Lesser Known Google Analytics Features

Google Analytics is a great program that can do a lot more than most people realize. Here are a few features that you may not know about.

The Ultimate Web Analytics Data Reconciliation Checklist

[...]

March 31st, 2010 at 11:29

Hi Avinash,

I'm commenting on this article because I think it's the most relevant to what I'm trying to figure out — reconciling numbers from different analytics tools.

We have been using Google Analytics for a while, and now are trying to shift over to Yahoo Analytics. But the numbers are just so far apart that I'm banging my head on the monitor trying to tie them…

The strange thing is that they are pretty close in terms of total visits and pageview counts (3 and 20 percent off, respectively). But for some reason the allocation by traffic sources, for visits and pageviews, is off by about 50-100% for all the categories (direct, search, referral). For example, Yahoo has twice the amount of pages reported as "direct", while Google has three times more visits from "organic search". Makes absolutely no sense to me, since from all I can gather the metrics (traffic sources) are defined pretty much the same in both, and if pageviews/visits were defined radically differently, the overall traffic totals would be much more off too, wouldn't they?

Have you come across this phenomenon before? Am I missing something?

I'm trying to figure this out because according to Google Analytics, search engines are our primary source of traffic, while according to Yahoo Analytics they are the least important. You can see how depending on which one is closer to truth our whole online strategy can get affected.

Any thoughts?

Thanks,

Yulia

April 1st, 2010 at 07:12

Yulia: It is possible that your site is not tagged completely, specifically it looks like your search landing pages are missing the YWA tag.

It is impossible, yes impossible :), for GA and YWA to be that far apart. I run both on my blog and I have recommended both to many websites (who at least for some time ran both together).

There is nothing particularly magnificent about GA in how it collects data, or particularly magnificent about Yahoo! Web Analytics. They (and Omniture and WebTrends) use pretty much the exact same technology to collect data.

It might be helpful to do a site audit (use WASP or ObservePoint) and/or hire an authorized consultant to help you do an analysis of what the issue might be.
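As a rough illustration of what a tag audit checks (this is not how WASP or ObservePoint work, just a sketch of the idea), the Python below looks for each tool's tag in the HTML of each page and reports the pages where one is missing. The page paths, the HTML and the marker strings are all placeholders; you would substitute the script names your own tags actually use.

```python
def missing_tags(pages, tag_markers):
    """Return {page: [tools whose marker string is absent from that page's HTML]}."""
    report = {}
    for page, html in pages.items():
        missing = [tool for tool, marker in tag_markers.items() if marker not in html]
        if missing:
            report[page] = missing
    return report


# Placeholder marker strings, NOT the real GA or YWA script names.
tag_markers = {
    "GA": "ga-tag-placeholder.js",
    "YWA": "ywa-tag-placeholder.js",
}

# Placeholder pages: the search landing page is missing the YWA tag.
pages = {
    "/home": '<script src="ga-tag-placeholder.js"></script>'
             '<script src="ywa-tag-placeholder.js"></script>',
    "/search-landing": '<script src="ga-tag-placeholder.js"></script>',
}

print(missing_tags(pages, tag_markers))  # {'/search-landing': ['YWA']}
```

A page that shows up in the report for only one tool is a likely source of differences in the traffic source split, since visits landing there are seen by one tool and not the other.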

Avinash.

April 1st, 2010 at 08:10

Thanks Avinash. The tagging on landing pages turned out to be the problem. Busy fixing them all up :)

April 2nd, 2010 at 01:08

Hi Yulia

Also, have a read of this vendor agnostic whitepaper from me (updated last month):

http://www.advanced-web-metrics.com/understanding-accuracy

Also, more specifically I posted accuracy data on YWA v GA v SiteCensus:

http://www.advanced-web-metrics.com/blog/2008/12/16/web-analytics-accuracy-comparing-google-analytics-yahoo-web-analytics-and-nielsen-sitecensus/

HTH

Best regards, Brian

April 5th, 2010 at 09:02

Hi Brian,

Thank you very much for the links. Very helpful, especially the 2nd one! I was looking for something like that…

Thanks,

Yulia

April 9th, 2010 at 19:56

I have created a script called YMMV Real Web Stats that allows you to quickly get a Google Analytics Accuracy number. I have released it as open source in the hope that passionate coders/developers and analytics lovers can help me make it a great tool. It uses a control to calculate the accuracy of a Google Analytics installation compared to the items being blocked. It counts adblock, noscript and other ways Google Analytics will be blocked. If anyone is interested in getting the code it is available here:

http://sourceforge.net/projects/ymmv-webstats/

or here:

http://code.google.com/p/ymmv-webstats/
