Techniques for Security Risk Analysis of Enterprise Networks

Summary: Today's information systems face sophisticated attackers who combine multiple vulnerabilities to penetrate networks with devastating impact. The overall security of an enterprise network cannot be determined by simply counting the number of vulnerabilities. To accurately assess the security of networked systems, one must understand how vulnerabilities can be combined to stage an attack. We model such composition of vulnerabilities through attack graphs, which show all the paths by which vulnerabilities allow incremental network penetration. We propagate attack likelihoods through the attack graph, yielding a novel metric that measures the overall security of a networked system such as interacting Web Services. From this, we score risk mitigation options in terms of maximizing security and minimizing cost. For practical implementation, we can rely on an existing attack graph generation tool. This tool populates attack graph models from live network scans and databases of reported vulnerabilities. As additional input to our model, we use comprehensive sources of security risk scores for individual vulnerabilities. Our flexible new attack graph metric model can be used to quantify the overall security of networked systems and to study cost/benefit tradeoffs when analyzing return on security investment.

Description:

1. Introduction

At present, computer networks constitute the core component of information technology infrastructures in areas such as power grids, financial data systems, and emergency communication systems. Protection of these networks from malicious intrusions is critical to the economy and security of our nation. To improve the security of these information systems, it is necessary to measure the amount of security provided by different network configurations. The objective of our research is to develop a standard model for measuring the security of computer networks.
A standard model will enable us to answer questions such as “Are we more secure than yesterday?” or “How does the security of one network configuration compare with another?” Also, having a standard model to measure network security will bring together users, vendors, and researchers to evaluate methodologies and products for network security. Some of the challenging aspects of this project are as follows.

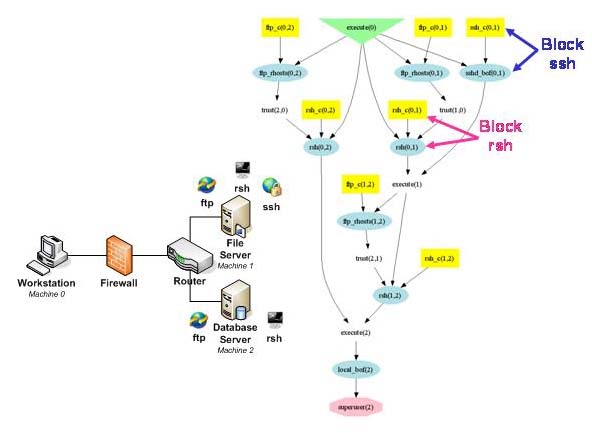

Good metrics should be measured consistently, be inexpensive to collect, be expressed numerically, have units of measure, and have specific context [1]. We meet this challenge by capturing vulnerability interdependencies and measuring security in terms of how real attackers actually penetrate the network. We analyze all attack paths through a network, providing a metric of overall system risk. Through this metric, we analyze tradeoffs between security costs and security benefits. Decision makers can therefore avoid overinvesting in security measures that do not pay off, or underinvesting and risking devastating consequences. Our metric is consistent, unambiguous, and provides context for understanding the security risk of computer networks.

2. Attack Graphs

Attack graphs model how multiple vulnerabilities may be combined for an attack. They represent system states using a collection of security-related conditions, such as the existence of a vulnerability on a particular host or the connectivity between different hosts. Vulnerability exploitation is modeled as a transition between system states.

As an example, consider Figure 1. The left side shows a network configuration, and the right side shows the attack graph for compromise of the database server by a malicious workstation user. In the network configuration, the firewall is intended to help protect the internal network. The internal file server offers file transfer (ftp), secure shell (ssh), and remote shell (rsh) services. The internal database server offers ftp and rsh services. The firewall allows ftp, ssh, and rsh traffic from a user workstation to both servers, and blocks all other traffic. In the attack graph, attacker exploits are blue ovals, with edges for their preconditions and postconditions. The numbers inside parentheses denote source and destination hosts. Yellow boxes are initial network conditions, and the green triangle is the attacker’s initial capability.
Conditions induced by attacker exploits are plain text. The overall attack goal is a red octagon. The figure also shows the direct impact of blocking ssh or rsh traffic to the file server at the firewall, which prevents certain exploits in the attack graph.
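To make the precondition/postcondition model concrete, here is a minimal Python sketch that encodes the first attack path walked through in this section (sshd_bof(0,1), ftp_rhosts(1,2), rsh(1,2), then a local buffer overflow on the database server) and forward-chains from the initial conditions. The condition names (user(0), ssh(0,1), trust(2,1), root(2), and so on), the name local_bof(2,2), and the helper functions are hypothetical labels chosen for illustration, not taken from the figure or from any attack graph tool. The sketch also assumes, as is common for attack graphs, that a condition once gained is never lost. Removing ssh(0,1) from the initial conditions mimics blocking ssh traffic to the file server.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Exploit:
    """A state transition: fires once all preconditions hold, adding postconditions."""
    name: str
    pre: frozenset
    post: frozenset


def make_exploit(name, pre, post):
    # Hypothetical helper that freezes the condition sets.
    return Exploit(name, frozenset(pre), frozenset(post))


# Hypothetical encoding of the described path (condition names are assumptions).
EXPLOITS = [
    make_exploit("sshd_bof(0,1)", {"user(0)", "ssh(0,1)"}, {"user(1)"}),
    make_exploit("ftp_rhosts(1,2)", {"user(1)", "ftp(1,2)"}, {"trust(2,1)"}),
    make_exploit("rsh(1,2)", {"user(1)", "rsh(1,2)", "trust(2,1)"}, {"user(2)"}),
    make_exploit("local_bof(2,2)", {"user(2)"}, {"root(2)"}),
]
# user(0) is the attacker's initial capability; the rest are initial network conditions.
INITIAL = frozenset({"user(0)", "ssh(0,1)", "ftp(1,2)", "rsh(1,2)"})


def reachable(initial, exploits):
    """Forward-chain: fire every enabled exploit until no new condition appears.

    Assumes monotonicity (conditions are never revoked once gained).
    """
    conds = set(initial)
    fired = []
    changed = True
    while changed:
        changed = False
        for e in exploits:
            if e.name not in fired and e.pre <= conds:
                conds |= e.post
                fired.append(e.name)
                changed = True
    return conds, fired


conds, fired = reachable(INITIAL, EXPLOITS)
print("root(2)" in conds, fired)
# prints: True ['sshd_bof(0,1)', 'ftp_rhosts(1,2)', 'rsh(1,2)', 'local_bof(2,2)']

# Blocking ssh to the file server removes the ssh(0,1) connectivity condition.
blocked, _ = reachable(INITIAL - {"ssh(0,1)"}, EXPLOITS)
print("root(2)" in blocked)  # prints: False
```

Because this encoding contains only the one described path, blocking ssh cuts off the goal entirely; a fuller encoding of Figure 1 with alternative paths would show which paths remain.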

The attack graph includes these attack paths:

The first attack path starts with sshd_bof(0,1). This denotes a buffer overflow exploit executed from Machine 0 (the workstation) against the secure shell service on Machine 1 (the file server). In a buffer overflow attack, a program is made to erroneously store data beyond a fixed-length buffer, overwriting adjacent memory that holds program flow data. The result of the sshd_bof(0,1) exploit is that the attacker can execute arbitrary code on the file server. The ftp_rhosts(1,2) exploit is now possible: the attacker exploits a particular ftp vulnerability to anonymously upload a list of trusted hosts from Machine 1 (the file server) to Machine 2 (the database server). The attacker can leverage this new trust to remotely execute shell commands on the database server without providing a password, i.e., the rsh(1,2) exploit. This exploit establishes the attacker’s control over the database server as a user with regular privileges. A local buffer overflow exploit is then possible on the database server, against a program that runs in the context of a privileged process. The result is that the attacker can execute code on the database server with full privileges.

3. Measuring Attack Likelihood

Hardening measures such as those shown in Figure 1 may still leave residual attack paths to the goal. Our attack graph metric quantifies this risk by measuring the likelihood that such residual paths may eventually be realized by attackers. When a network is more secure, attack likelihood is reduced. Preventing exploits (as in Figure 1) removes certain paths, in turn reducing attack likelihood. When the attacker cannot reach the goal, our metric is zero. When the attacker is completely assured of reaching the goal, the metric is unity. An attack graph naturally encodes both conjunctive and disjunctive attack relationships. For example, in Figure 1, the attacker cannot upload the list of trusted hosts if the ftp service does not exist, nor if the attacker cannot use Machine 1 as a normal user. Because both conditions are required, the relationship is conjunctive.
On the other hand, if a condition can be satisfied in more than one way, it does not matter which path the attacker follows to satisfy it, making the relationship disjunctive. To compute our metric, we propagate exploit measures through the attack graph, from initial conditions to goal, according to conjunctive and disjunctive dependencies. When one exploit must follow another in a path, both are needed to eventually reach the goal (conjunction), so their measures are multiplied, i.e., p(A and B) = p(A)p(B). When a choice of paths is possible, either is sufficient for reaching the goal (disjunction), i.e., p(A or B) = p(A) + p(B) - p(A)p(B). Both formulas hold when A and B are independent. Paths coming into an exploit may form arbitrary logical expressions; in such cases, we propagate exploit measures through the corresponding conjunctive/disjunctive combinations. We also resolve any attack graph cycles based on their distance from the initial conditions, and remove any parts of the graph that do not ultimately lead to the goal.

Our exploit measures could be Boolean variables, real numbers, or even probability distributions. Defining the actual measures (value or distribution) of each individual exploit is part of the ongoing effort of keeping our database of modeled exploits current. A number of vendors and open sources provide ongoing descriptions of reported vulnerabilities, which we can leverage to populate our model.
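The propagation rules above can be illustrated with a minimal Python sketch of one straightforward node-by-node reading: each exploit's measure is its own score times the product of its preconditions' measures (conjunction), and each condition combines the exploits that yield it with p(A or B) = p(A) + p(B) - p(A)p(B) (disjunction). Everything here is an assumption for illustration: the condition names are hypothetical, the scores are made-up placeholders rather than values from any vulnerability database, and ftp_rhosts(0,1) followed by rsh(0,1) is an assumed second route to user(1), added only so the disjunctive rule has something to combine. The sketch handles only acyclic graphs listed in dependency order; it does not implement the cycle resolution or pruning described in the text. Because the rules treat inputs as independent, they count user(1) twice on this path (it feeds both ftp_rhosts(1,2) and rsh(1,2)), so for comparison `enumerate_exact` brute-forces every success/failure outcome, assuming each enabled exploit succeeds independently with its score.

```python
import math
from itertools import product

# Hypothetical encoding: name -> (score, preconditions, postcondition).
# ftp_rhosts(0,1) and rsh(0,1) are an ASSUMED alternative route to user(1).
# Order matters: each exploit follows all exploits producing its preconditions.
EXPLOITS = {
    "ftp_rhosts(0,1)": (0.8, {"user(0)", "ftp(0,1)"}, "trust(1,0)"),
    "rsh(0,1)": (0.9, {"user(0)", "rsh(0,1)", "trust(1,0)"}, "user(1)"),
    "sshd_bof(0,1)": (0.5, {"user(0)", "ssh(0,1)"}, "user(1)"),
    "ftp_rhosts(1,2)": (0.8, {"user(1)", "ftp(1,2)"}, "trust(2,1)"),
    "rsh(1,2)": (0.9, {"user(1)", "rsh(1,2)", "trust(2,1)"}, "user(2)"),
    "local_bof(2,2)": (0.5, {"user(2)"}, "root(2)"),
}
INITIAL = {"user(0)", "ftp(0,1)", "rsh(0,1)", "ssh(0,1)", "ftp(1,2)", "rsh(1,2)"}
GOAL = "root(2)"


def propagate(exploits, initial, goal):
    """One pass of the conjunctive/disjunctive rules over an ordered, acyclic graph."""
    prob = {c: 1.0 for c in initial}  # initial conditions hold for certain
    for score, pre, post in exploits.values():
        # Conjunction: all preconditions are needed, p(A and B) = p(A)p(B).
        p = score * math.prod(prob.get(c, 0.0) for c in pre)
        # Disjunction: any exploit yielding `post` suffices.
        q = prob.get(post, 0.0)
        prob[post] = q + p - q * p
    return prob.get(goal, 0.0)


def enumerate_exact(exploits, initial, goal):
    """Exact goal probability if each enabled exploit succeeds independently
    with its score: sum the weights of all outcomes that reach the goal."""
    names = list(exploits)
    total = 0.0
    for outcome in product((True, False), repeat=len(names)):
        weight, working = 1.0, []
        for name, ok in zip(names, outcome):
            score = exploits[name][0]
            weight *= score if ok else 1.0 - score
            if ok:
                working.append(name)
        conds = set(initial)
        # The listing order is topological, so one forward pass suffices.
        for name in working:
            _, pre, post = exploits[name]
            if pre <= conds:
                conds.add(post)
        if goal in conds:
            total += weight
    return total


naive = propagate(EXPLOITS, INITIAL, GOAL)
exact = enumerate_exact(EXPLOITS, INITIAL, GOAL)
print(round(naive, 6), round(exact, 6))  # prints: 0.266256 0.3096

no_ssh = INITIAL - {"ssh(0,1)"}  # block ssh traffic to the file server
print(round(propagate(EXPLOITS, no_ssh, GOAL), 6),
      round(enumerate_exact(EXPLOITS, no_ssh, GOAL), 6))  # prints: 0.186624 0.2592
```

Both values drop when ssh(0,1) is removed, consistent with the point that preventing exploits lowers attack likelihood. The gap between the two columns shows why conditions shared by several exploits on the same path need care when the multiplication rule is applied node by node.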

End Date: ongoing
Lead Organizational Unit: ITL
Staff: Dr. Anoop Singhal
Related Programs and Projects: For more information regarding the Measuring Security Risk In Enterprise Networks, please visit the Computer Security Resource Center (CSRC).
Contact

Anoop Singhal
100 Bureau Drive