Kent Anderson offers a provocative post, “The Mirage of Fixity — Selling an Idea Before Understanding the Concept,” in which he takes Nicholas Carr to task for a Wall Street Journal article bemoaning the death of textual fixity. Here’s a quote from Carr:

Once digitized, a page of words loses its fixity. It can change every time it’s refreshed on a screen. A book page turns into something like a Web page, able to be revised endlessly after its initial uploading… [Beforehand] “typographical fixity” served as a cultural preservative. It helped to protect original documents from corruption, providing a more solid foundation for the writing of history. It established a reliable record of knowledge, aiding the spread of science.

To my mind, Anderson does a good job demonstrating not only that “file fluidity” is a modern benefit of the digital age, but also that it has long existed in the form of revised texts, different editions and even different interpretations of canonical works, including the Bible. Getting to the root of textual fixity, according to Anderson, means getting extremely specific, “almost to the level of the individual artifact and its reproductions.”

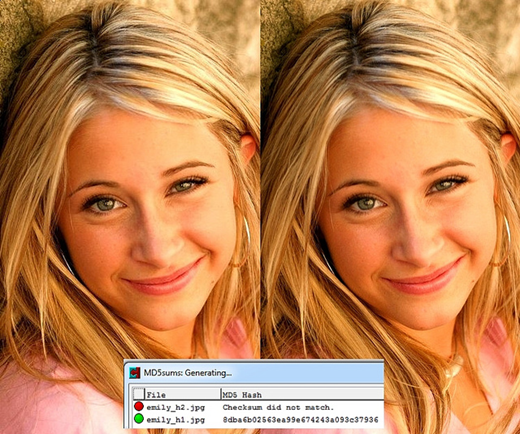

In the world of digital stewardship, file fixity is a very serious matter. It’s regarded as critical to ensure that digital files are what they purport to be, principally through using checksum algorithms to verify that the exact digital structure of a file remains unchanged as it comes into and remains in preservation custody. The technology behind file fixity is discussed in an earlier post on this blog; a good description of current preservation fixity practices is outlined in another post.
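As a rough illustration of the checksum approach described above, here is a minimal Python sketch. It assumes SHA-256 as the algorithm and a digest recorded at the moment a file enters custody; the function names `sha256_of_file` and `verify_fixity` are hypothetical and not taken from any particular preservation system. Real repositories typically keep digests in a manifest and may use other algorithms, such as MD5 or SHA-1.

```python
import hashlib
import tempfile
from pathlib import Path


def sha256_of_file(path: Path, chunk_size: int = 65536) -> str:
    """Return the hex SHA-256 digest of a file (hypothetical helper)."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        # Read in fixed-size chunks so large files need not fit in memory.
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_fixity(path: Path, recorded_digest: str) -> bool:
    """True if the file's current digest matches the one recorded at ingest.

    Hypothetical helper: assumes the recorded digest is a hex string.
    """
    return sha256_of_file(path) == recorded_digest.lower()


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        item = Path(tmp) / "page.txt"
        item.write_bytes(b"Once digitized, a page of words loses its fixity.")
        recorded = sha256_of_file(item)  # stored when the file is ingested
        print(verify_fixity(item, recorded))  # True: file unchanged
        item.write_bytes(b"Once digitized, a page of words loses its fixity!")
        print(verify_fixity(item, recorded))  # False: one byte changed
```

Note that a check like this only says whether the bits are identical; it says nothing about whether the file can still be rendered with its original look and feel.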

It is well and good to strive for file fixity in this context, and it is indeed “to the level of the individual artifact and its reproductions.” The harder question is how much fidelity needs to be maintained to the original look, feel and experience of a digital file or body of interrelated files. Viewing a particular set of files depends on a particular stack of hardware, software and contextual information, all of which will change over time. Ensuring access to preserved files is generally assumed to eventually require either 1) migrating them to another format, which means changing them in some way by keeping some of their properties and discarding others, or 2) emulating the original computing environment.

Each approach has advantages and disadvantages, but the main issue comes down to the importance placed on the integrity of the original files. Euan Cochran, in a comment on an earlier post on this blog, noted that “I think it is important to differentiate between preventable and non-preventable change. I believe that the vast majority of change in the digital world is preventable (e.g. by using emulation strategies instead of migration strategies).” He noted that the presumed higher cost of emulation works against it, even though we currently lack reliable economic models for preservation.

I wonder, however, if the larger issue is that culturally we are still struggling with the philosophical concepts of fixity and fluidity. Do we aim for the kind of substantive finality that Carr celebrates, or do we accept a greater degree of derivation, ideally documented as such, in our digital information? Kari Kraus, in a comment on a blog post last week, put the question a different way:

[Significant properties] are designed to help us adopt preservation strategies that will ensure the longevity of some properties and not others. But if we concede that all properties are potentially significant within some contexts, at some time, for some audiences, then we are forced into a preservation stance that brooks no loss. What to do?

Ultimately I think wider social convention will determine the matter. Until then it makes good sense to continue to explore all the options open to us for digital preservation.