Rheology

Summary:

Rheology is the study of the flow properties of liquids. The flow properties of interest in our research are viscosity and yield stress. Viscosity is a measure of resistance to flow, where a high viscosity indicates a high resistance (e.g. honey) and a low viscosity indicates a low resistance (e.g. water). Yield stress, a related property, is a measure of the amount of force needed to initiate flow.

Our research focuses on the rheology of dense suspensions, specifically cement, mortar, and concrete. Understanding the flow properties of these dense suspensions, as well as other complex fluids such as colloids and ceramic slurries, is of importance to industry and presents a significant theoretical challenge. The computational modeling of such systems is challenging due to large range of length and time scales involved as well as the difficulty of tracking boundaries between the different fluid/fluid and fluid/solid phases.

We are currently studying the flow of cement paste, mortar, and concrete, within a 4-blade vane rheometer, which is a device designed to measure rheological properties. The raw output from a rheometer is the angular velocity of the blades and the torque applied to turn the blades. Current rheometers are not able to use these raw outputs to compute the flow properties of dense suspensions in fundamental units, such as 'Pa s' or equivalently 'kg/(m s^2), although for a given rheometer, they can give a relative measure of the viscosity. Our goal is to enable accurate measurement of the rheological properties of cement paste, mortar, and concrete, by linking the raw outputs from rheometers to the flow properties of these dense suspensions.

Additional Technical Details:

Simulating the Flow of Dense Suspensions

We employ two computational methods to simulate the flow of dense suspensions, with the choice depending on whether the matrix fluid of the suspension, that is, the fluid part of the suspension, is Newtonian or non-Newtonian. A fluid is Newtonian if it has a constant viscosity (for a given temperature), regardless of any shearing forces applied to it. A common example of a Newtonian fluid is water. A fluid is non-Newtonian if its viscosity (for a given temperature) is not constant but is a function of the shear rate. There are several categories of non-Newtonian fluids based on how their viscosity changes in response to shearing. For example, non-Newtonian fluids that exhibit a lower viscosity while being sheared, as opposed to at rest, are referred to as 'shear thinning fluids'. Conversely, non-Newtonian fluids that exhibit a higher viscosity when sheared are referred to as 'shear thickening fluids'.

For simulating suspensions comprising a Newtonian fluid matrix we use the computational method called Dissipative Particle Dynamics or DPD, invented in the early 1990s [Hoogerbrugge]. DPD is used, instead of the more direct models of Molecular Dynamics (MD) or Brownian Dynamics (BD), in order to study the physical behavior of systems of a larger size and for longer time intervals, by orders of magnitude, than would be possible with either MD or BD. Also, the tracking of boundaries between the different fluid/fluid and fluid/solid phases is made tractable with DPD.

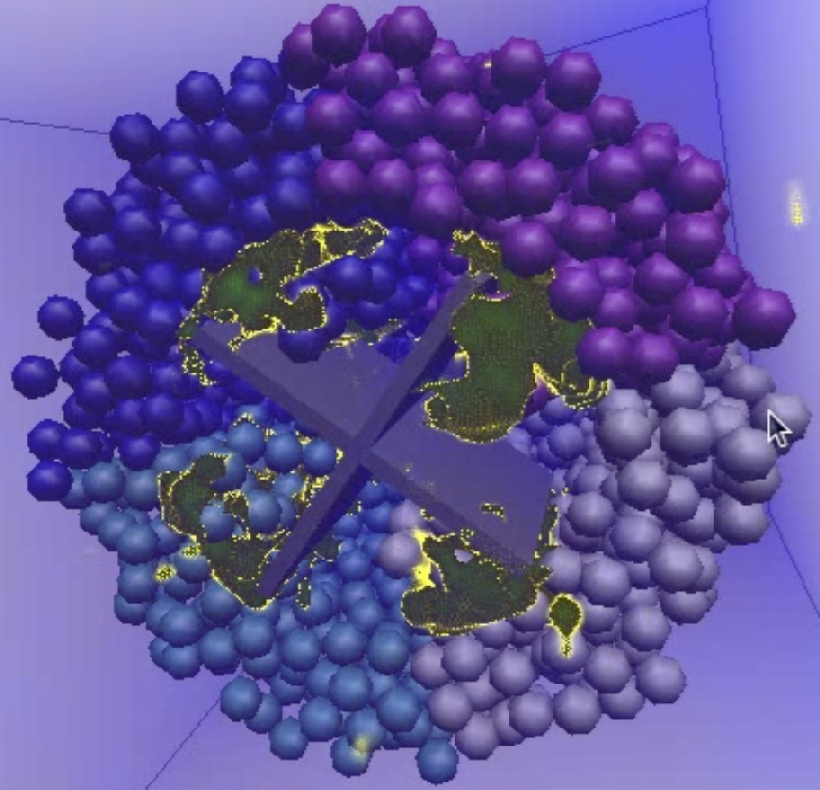

Each of the particles in a DPD model is a mesoscopic particle that represents the molecules within a small volume of the fluid. We use the term 'inclusion' to refer to the objects that are suspended in the matrix fluid. For our cement and concrete simulations, these inclusions are typical construction aggregates such as sand, gravel, or crushed rock. Each inclusion is modeled as a group of DPD particles that are locked in relative position and move as a unit. In contrast to the DPD particles that comprise an inclusion, the DPD particles that comprise the matrix fluid are called 'free' particles.

DPD methods resemble MD in that the particles move according to Newton's laws, however in DPD the inter-particle interactions are chosen to allow for much larger time steps. The original DPD algorithms used an Euler algorithm for time integration and updating the positions of the free particles, and a leap frog algorithm for updating the positions of the solid inclusions. Our simulator, QDPD, (for Quaternion-based Dissipative Particle Dynamics) is a modification of the original DPD algorithm that uses a modified velocity-Verlet algorithm [Verlet, Allen] to update the positions of both the free particles and the solid inclusions. In addition, the solid inclusion motion is managed using a quaternion based scheme [Omelayan] (hence the Q in QDPD).

For simulating suspensions comprising a non-Newtonian fluid matrix we use the computational method called Smoothed Particle Hydrodynamics (SPH) introduced in 1977 [Lucy, Gingold and Monaghan], originally developed for the simulation of astronomical bodies. This method uses mesoscopic particles in a similar way as DPD, with free particles making up the matrix fluid and each inclusion made up of a set of particles that move as a unit. Computationally, the main difference between the DPD and SPH models is the computation of the inter-particle forces. In the DPD model, the force between a pair of particles is only dependent on the distance between them and their velocities. In an SPH model, the inter-particle force computation also takes into account the particles in the immediate vicinity.

The Parallel Implementation

The QDPD program (which includes both the DPD and SPH methods) is a parallel program, that is, it is designed to run a single simulation using many processors simultaneously. QDPD is written in the common 'single program/multiple data' (SPMD) style using MPI, the well supported message passing library, to handle all of the required inter-process communications. For a simulation, we use this single program, QDPD, run simultaneously on all of the requested processors, with each processor handling a subset of the required computations and communicating with the other processors as needed to maintain a consistent state.

A simple 3-dimensional domain decomposition is used in QDPD to manage the parallel computation. Logically, the processors are arranged in a 3-dimensional grid with each processor assigned a small sub-volume of the entire simulated system, with adjacent processors within the grid owning adjacent sub-volumes.

The number of processors that we can efficiently utilize for a single simulations depends primarily on the size of the system being simulated (number of free particles and number of inclusions) as well as the speed of the processors and the speed of the interconnecting communications network between the processors. Our large simulations currently are run on 32,678 processors (cores) of the IBM Blue Gene/P machine 'Intrepid' at the 'Leadership Computing Facility' of Argonne National Laboratory with each simulation taking on the order of 100 hours to complete.

Performance

The QDPD simulator is under constant development in order to meet the needs of our research as well as to maintain good performance on the changing architectures of the machines we use for these simulations. As mentioned above, our large simulations are run on the supercomputer 'Intrepid' at the Leadership Computing Facility of Argonne National Laboratory. Intrepid is an IBM Blue Gene/P with approximately 160 thousand processor cores, 80 terabytes of main memory, and a peak performance of 557 teraflops. The QDPD simulator has been shown to run efficiently on up to 32k cores of this machine. Once we go beyond this limit we have found the performance to begin to decrease due to too little work available for each core compared to the required communications overhead. We are currently exploring the use of multi-threading as a way to extend the parallelism of QDPD.

We run our smaller simulations on local clusters, such as the Raritan cluster at NIST, which is a typical cluster of about 700, 2 to 8 core, compute nodes, running Linux and connected with a combination of Ethernet and infiniband networks. In this environment, we can run our small simulations on 512 to 1024 cores with good performance. Compared to Intrepid, each of Raritan's compute cores are faster, with more available main memory per core but with an interconnect network that is not as fast. With a larger (compute speed/communicate speed) ratio, the performance of QDPD on Raritan begins to decline at a much lower number of cores than Intrepid.

References

-

Allen, M P, and D J Tildesley. 1989. Computer simulation of liquids. Oxford science publications. Oxford University Press.

-

Gingold, R. A., and J. J. Monaghan. 1977. Smoothed Particle Hydrodynamics: Theory and Application to Non-Spherical Stars. Monthly Notices of the Royal Astronomical Society 181: 375-389.

-

Hoogerbrugge, P. J, and J. M. V. A Koelman. 1992. Simulating Microscopic Hydrodynamic Phenomena with Dissipative Particle Dynamics. Europhysics Letters (EPL) 19 (3) (June 1): 155-160.

-

Lucy, L. B. 1977. Numerical Approach to the Testing of the Fission Hypothesis. The Astronomical Journal 82 (12) (December): 1013.

-

Omelyan, Igor P. 1998. On the Numerical Integration of Motion for Rigid Polyatomics: The Modified Quaternion Approach. Computers in Physics 12 (1) (January): 97-103.

-

Verlet, Loup. 1967. Computer Experiments on Classical Fluids. I. Thermodynamical Properties of Lennard-Jones Molecules. Physical Review 159 (1) (July 5): 98-103.

Return to

High Performance Computing